Hi, I’m Corentin, a Research Engineer at Huawei Noah's Ark Lab Paris, working on LLMs, AutoML and RL under the supervision of Balázs Kégl.

Prior to that, I was a Research Engineer in the Flowers lab at Inria, where I developed multi-agent systems under the supervision of Clément Moulin-Frier. During my MSc in Computer and Cognitive Sciences at ENSC, I also interned as a Data Scientist at Connectiv-IT and did Reinforcement Learning research as an intern at Inria Flowers.

Among many other things, I love sports and play volleyball at the national level (bronze medal at the 2023 French University Championship!).

Contact: corentin.lger@gmail.com

Research

See my Google Scholar profile for more details about my publications.

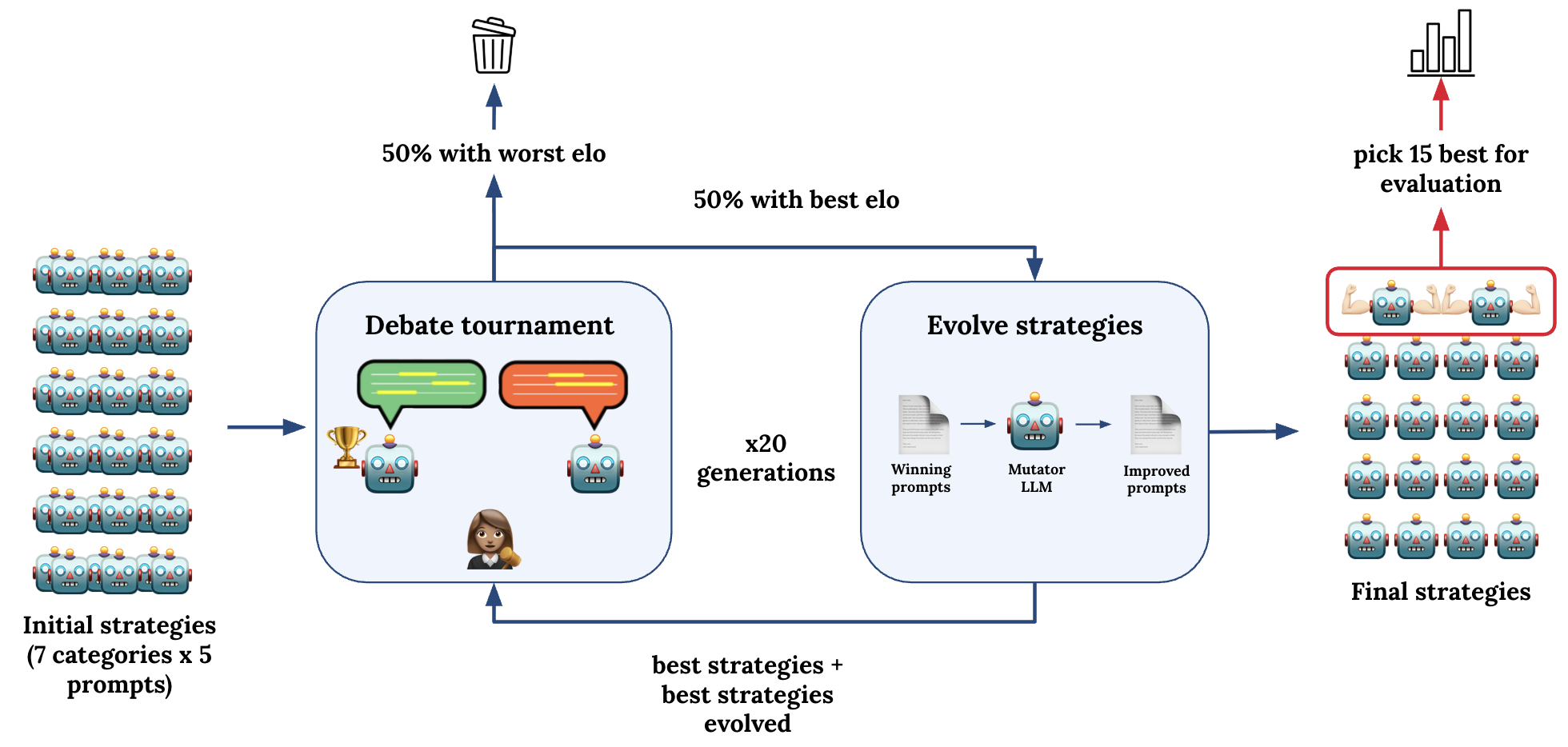

Optimizing for Persuasion Improves LLM Generalization: Evidence from Quality-Diversity Evolution of Debate Strategies

AJ Reedi, C Léger, J Pourcel, L Gaven, P Charriau, G Pourcel

MTI-LLM @ NeurIPS, 2025

@article{reedi2025optimizing,

title={Optimizing for Persuasion Improves LLM Generalization: Evidence from Quality-Diversity Evolution of Debate Strategies},

author={Reedi, Aksel Joonas and L{\'e}ger, Corentin and Pourcel, Julien and Gaven, Loris and Charriau, Perrine and Pourcel, Guillaume},

journal={arXiv preprint arXiv:2510.05909},

year={2025}

}

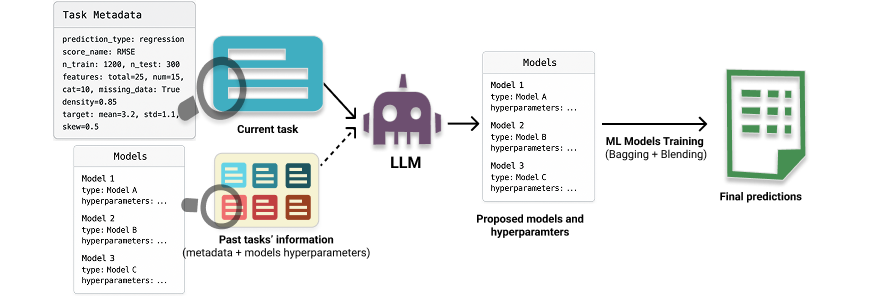

In-Context Meta-Learning with Large Language Models for Automated Model and Hyperparameter Selection

Y Attia El Hili, A Thomas, A Benechehab, C Léger, C Ancourt, B Kégl

LLM-eval @ NeurIPS, 2025

@inproceedings{el2025context,

title={In-Context Meta-Learning with Large Language Models for Automated Model and Hyperparameter Selection},

author={El Hili, Youssef Attia and Thomas, Albert and Benechehab, Abdelhakim and L{\'e}ger, Corentin and Ancourt, Corinne and K{\'e}gl, Bal{\'a}zs},

booktitle={NeurIPS 2025 Workshop on Evaluating the Evolving LLM Lifecycle: Benchmarks, Emergent Abilities, and Scaling},

year={2025}

}

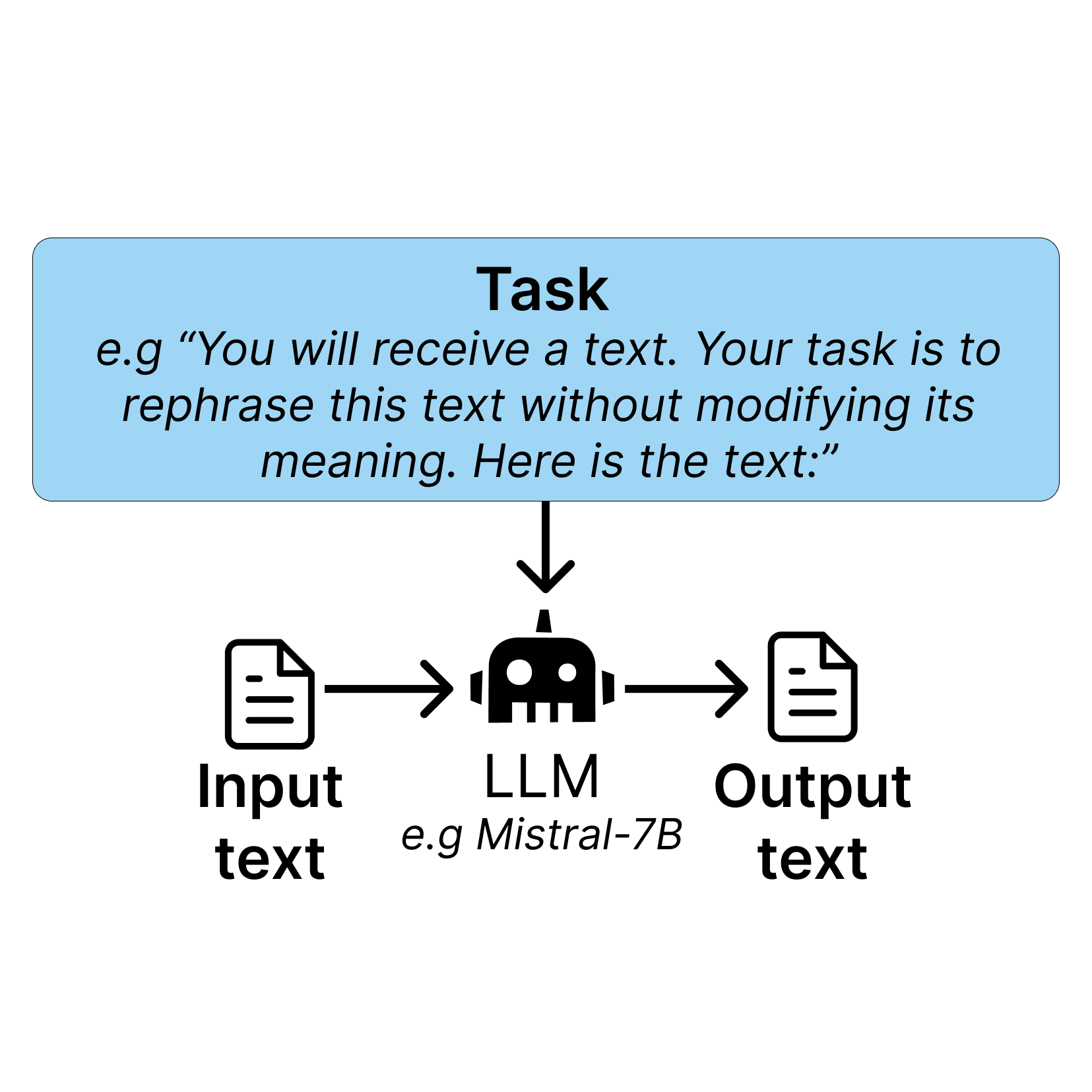

When LLMs Play the Telephone Game: Cumulative Changes and Attractors in Iterated Cultural Transmissions

J Perez, G Kovač, C Léger, C Colas, G Molinaro, M Derex, PY Oudeyer, C Moulin-Frier

ICLR, 2025

@inproceedings{perez2025llms,

title={When LLMs play the telephone game: Cultural attractors as conceptual tools to evaluate LLMs in multi-turn settings},

author={Perez, J{\'e}r{\'e}my and Kova{\v{c}}, Grgur and L{\'e}ger, Corentin and Colas, C{\'e}dric and Molinaro, Gaia and Derex, Maxime and Oudeyer, Pierre-Yves and Moulin-Frier, Cl{\'e}ment},

booktitle={The Thirteenth International Conference on Learning Representations},

year={2025}

}

Cultural evolution in populations of Large Language Models

J Perez, C Léger, M Ovando-Tellez, C Foulon, J Dussauld, PY Oudeyer, C Moulin-Frier

arXiv, 2024

@article{perez2024cultural,

title={Cultural evolution in populations of Large Language Models},

author={Perez, J{\'e}r{\'e}my and L{\'e}ger, Corentin and Ovando-Tellez, Marcela and Foulon, Chris and Dussauld, Joan and Oudeyer, Pierre-Yves and Moulin-Frier, Cl{\'e}ment},

journal={arXiv preprint arXiv:2403.08882},

year={2024}

}

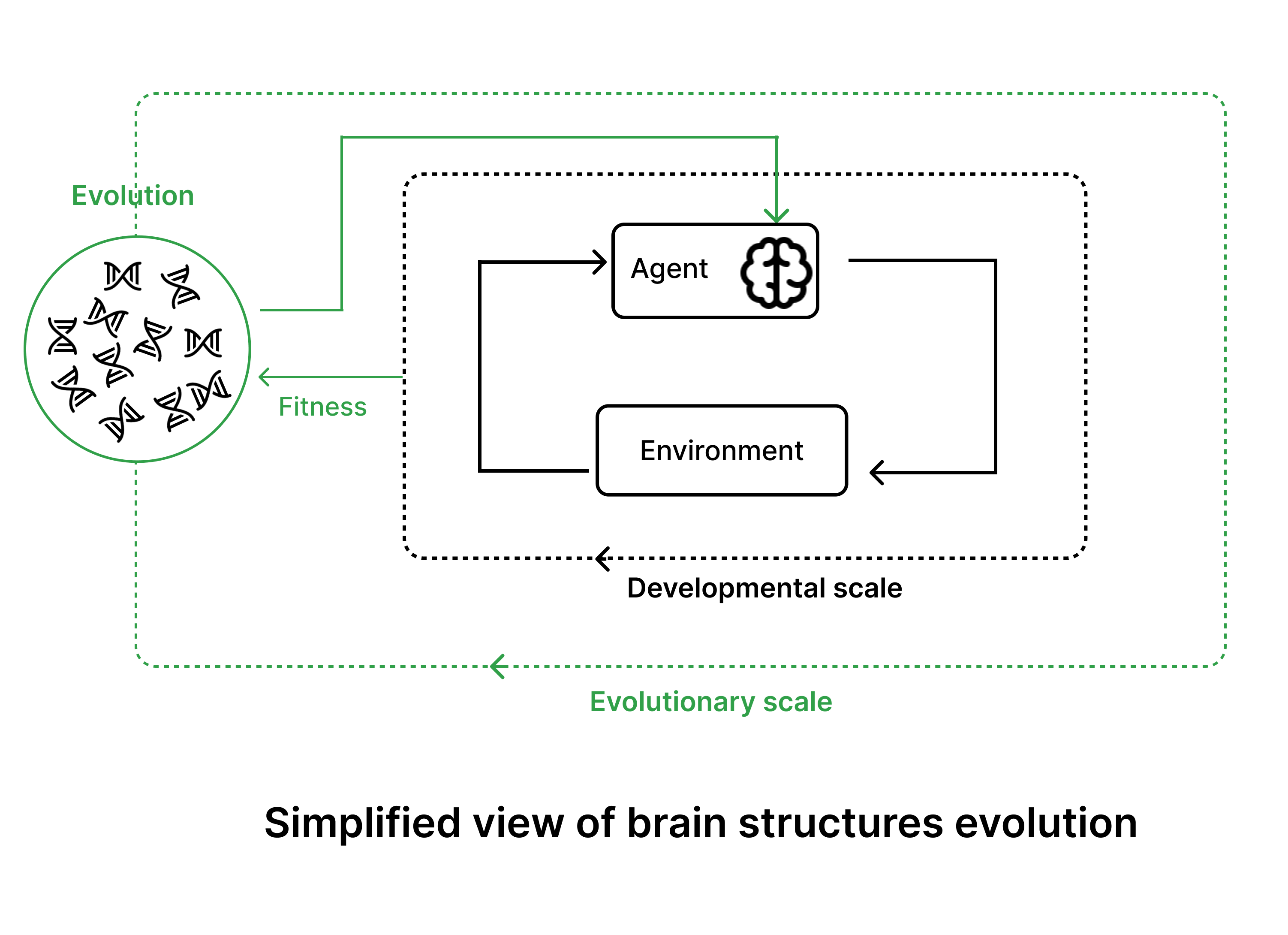

Evolving reservoirs for Meta Reinforcement Learning

*C Léger, *G Hamon, E Nisioti, X Hinaut, C Moulin-Frier (*equal contribution)

EvoStar [Long Talk], 2024

@inproceedings{leger2024evolving,

title={Evolving Reservoirs for Meta Reinforcement Learning},

author={L{\'e}ger, Corentin and Hamon, Gautier and Nisioti, Eleni and Hinaut, Xavier and Moulin-Frier, Cl{\'e}ment},

booktitle={International Conference on the Applications of Evolutionary Computation (Part of EvoStar)},

pages={36--60},

year={2024},

organization={Springer}

}

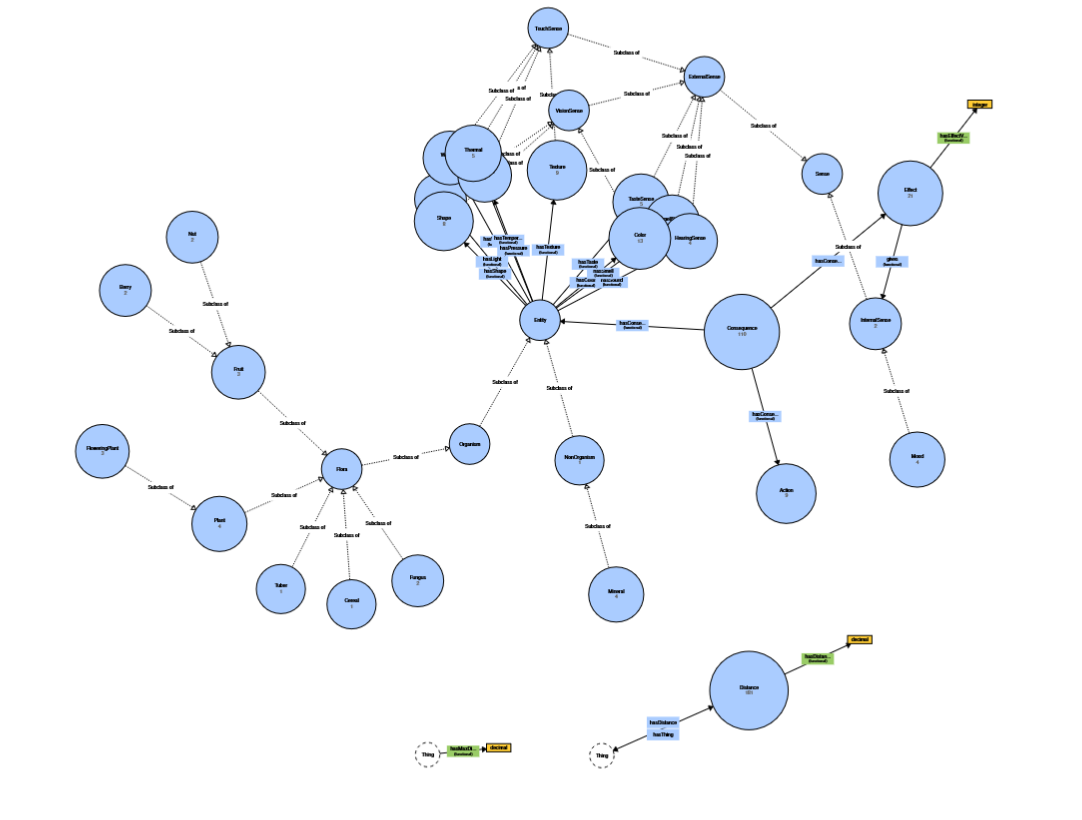

Early Empirical Results on Reinforcement Symbolic Learning

W Radji, C Léger, L Bardisbanian

HAL Inria, 2023

Open Source

Below are the open-source projects I have contributed to; see my GitHub profile for more details.

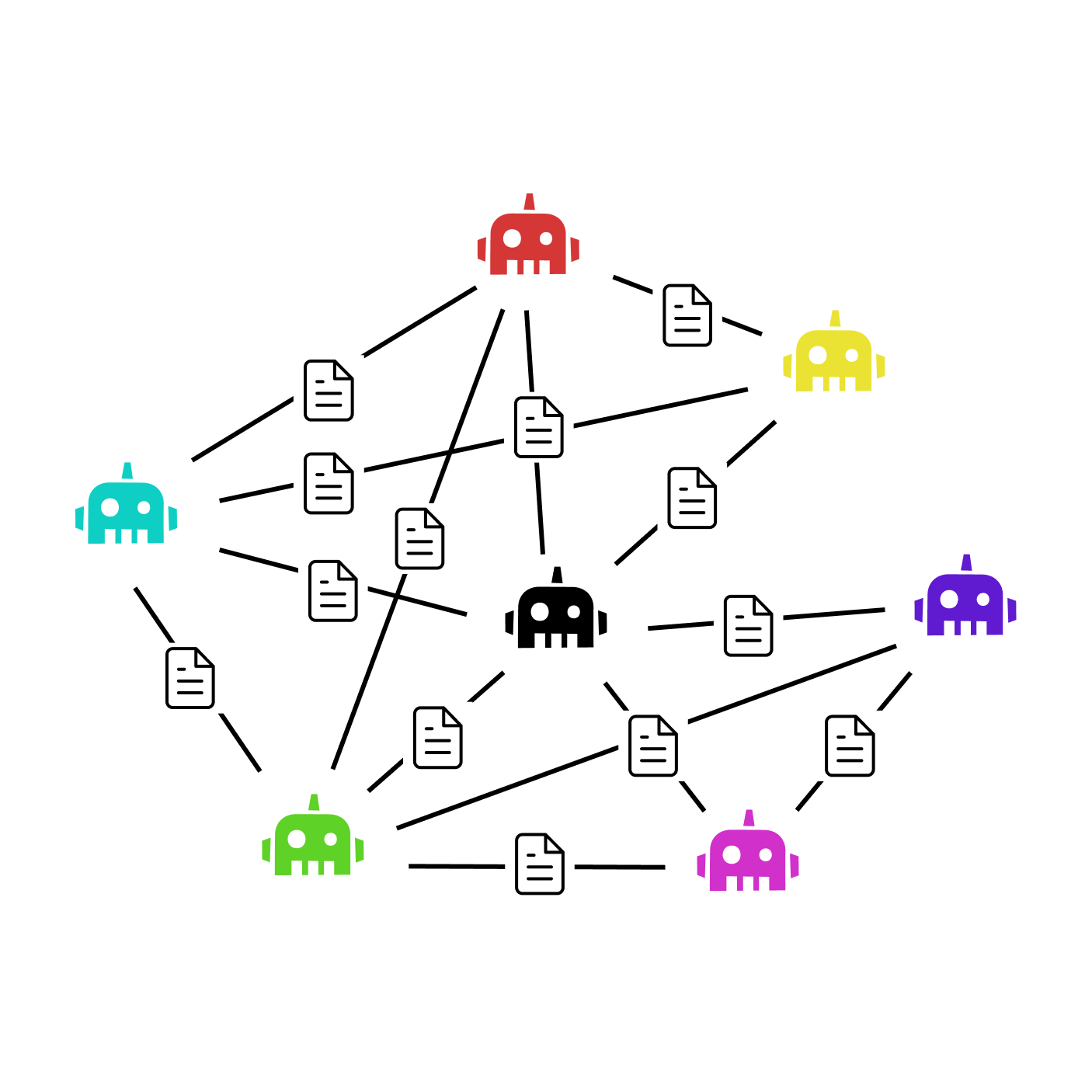

LLM-Culture: Simulating text evolution in networks of LLMs.

I also fixed a few issues in the Stable-Baselines3 Reinforcement Learning library and wrote a ReservoirPy tutorial on parallelized hyperparameter search on remote clusters.

Teaching

Hackathons

🧠 Hack1Robo 2024 (first place): Optimized the persuasion skills of LLMs in debate tournaments via prompt evolution, using a Quality-Diversity method to evolve the strategies of the debater LLMs.

🤖 Hugging Face LeRobot: Assembled a robotic arm and created a real-world RL environment for object manipulation. Trained the arm using both Behavioral Cloning and online Reinforcement Learning.

📚 Hack1Robo 2023: Simulated text evolution in populations of LLMs and analyzed the resulting dynamics (inspired by work in cultural evolution). This later led to the publication of two papers.

🧬 Inria Hackatech 2023: Optimized the strategies of multi-LLM agent systems via prompt evolution, reaching GPT-4-level performance on math tasks with evolved systems of GPT-3.5 agents. This led to the creation of a startup, Ebiose.